Evidence from Stakeholders

Approach to engagement

In spring and early summer 2024, OSR held semi-structured discussions with a range of key stakeholders to inform the approach to, and provide evidence for, this review. The topics for these discussions were informed by our existing regulatory work, but we also gave participants the opportunity to share broader views.

In drawing conclusions from this evidence, we sought to triangulate the views of stakeholders, drawing together common themes from the discussions that we had. Many of these themes echo those that emerged from considering our previous assessments of individual economic statistics. We also tested these conclusions with ONS staff.

These discussions were followed by a call for evidence, which received a small number of responses, and further discussions with key stakeholders. Overall, we conducted around 25 interviews with external stakeholders.

We have also drawn on the views set out in the Independent Report on the 2025 UK Statistics Assembly. The Assembly was jointly organised by the UK Statistics Authority and the Royal Statistical Society (RSS) and held in January 2025. In addition, we have drawn on our regular engagement with key users of economic statistics, including the RSS and Better Statistics.

The stakeholders we engaged with are listed in Annex 3.

Widespread support for ONS

Stakeholder discussions almost inevitably tend to focus on areas of concern. However, multiple stakeholders expressed strong support for ONS’s work. Particular emphasis was given to the general quality of the National Accounts, the confidence that most users have in ONS’s price statistics, methodological improvements (particularly the introduction of double deflation) and the developments made in the use of real-time and other timely indicators, particularly during the COVID-19 pandemic. Stakeholders also noted major improvements in engagement with users of individual statistical outputs.

The core purpose of economic statistics

Stakeholders regarded the core purpose of a national statistical institute as producing high-quality statistics, drawing on its natural advantages in data acquisition – its legal powers and reputation for impartiality and credibility. It was argued that researchers and academics would often be better placed to use statistics as inputs to analysis, particularly where high levels of assumption are required.

Against this core purpose, most stakeholders questioned whether ONS’s approach to allocating its resources is transparent and effective, and whether it has made the best use of the additional resources allocated following the Bean review.

Stakeholders had differing views, however, as to the specific mix of outputs that should constitute ONS’s core activities. For example, some stakeholders considered that too large a share of ONS resources has been allocated to outputs and activities that add limited value and do not reflect a clear vision of the core purpose of a national statistical institute. The independent report on the Statistics Assembly highlighted a need to identify key and vital economic statistics to prioritise, particularly when resources are constrained, with the aim of improving trustworthiness and reliability.

Several respondents highlighted monthly GDP as a statistic which, while high profile, is of uncertain quality and value. Others, however, expressed support for the statistic, arguing that once data are available for two months of a quarter, it is possible to predict the quarterly outturn with high accuracy.

Similarly, views were divided on the relative value of some of the work done as part of the “Beyond GDP” agenda, for example the production of statistics on inclusive income. Some stakeholders were strongly supportive of this work. Stakeholders who expressed reservations about it were not questioning the importance of wider measures of well-being and societal progress, but rather whether work in this area should be a high priority for ONS at present, given the fundamental methodological challenges and uncertainties, including in achieving international comparability. Their view was that this is an example of an area where work might, for the time being, be better taken forward in the academic sphere.

These differing stakeholder views led us to conclude that there is no single right answer as to what ONS should focus on. It is therefore crucial that it sets out a clear articulation of its priorities – what it has chosen to focus on and why.

Survey response rates

Stakeholders repeatedly referred to problems with the quality of key ONS outputs. The primary concern was a deterioration in the quality of data sourced from household surveys. Falling response rates to the Labour Force Survey (LFS) and the Living Costs and Food Survey were widely noted. Concerns were also expressed about the Wealth and Assets Survey and the Family Resources Survey (which is funded by the Department for Work and Pensions).

The strong consensus among stakeholders was that ONS has been slow to respond to falling response rates to household surveys and has not put in place sufficient mitigation measures quickly enough. Stakeholders also considered that ONS has not communicated effectively about emerging survey problems.

The independent report on the Statistics Assembly suggested that more proactive research was needed on what influences response to surveys and what constitutes an acceptable level of response.

Consistent with stakeholder concerns, there is certainly evidence across ONS household surveys of longer-term reductions in response rates, which, while accentuated by the pandemic, preceded that crisis.

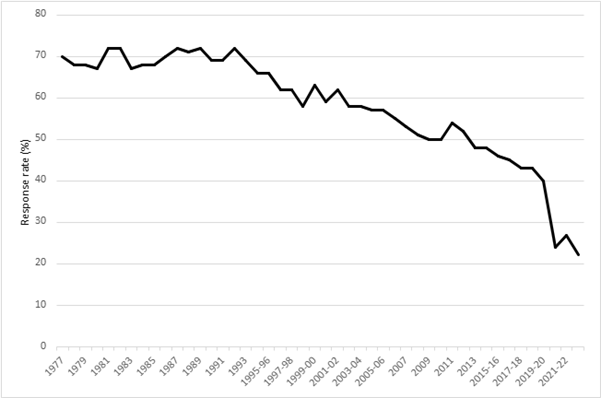

These reductions can be seen clearly with the Living Costs and Food Survey, for which a long run of data is shown in Chart 1. The decreasing trend in responses can be seen starting in the early 1990s.

Chart 1. Response rate for Living Costs and Food Survey.

Source: Office for National Statistics.

Additional analysis of response rates to other household surveys can be found in Annex 4.

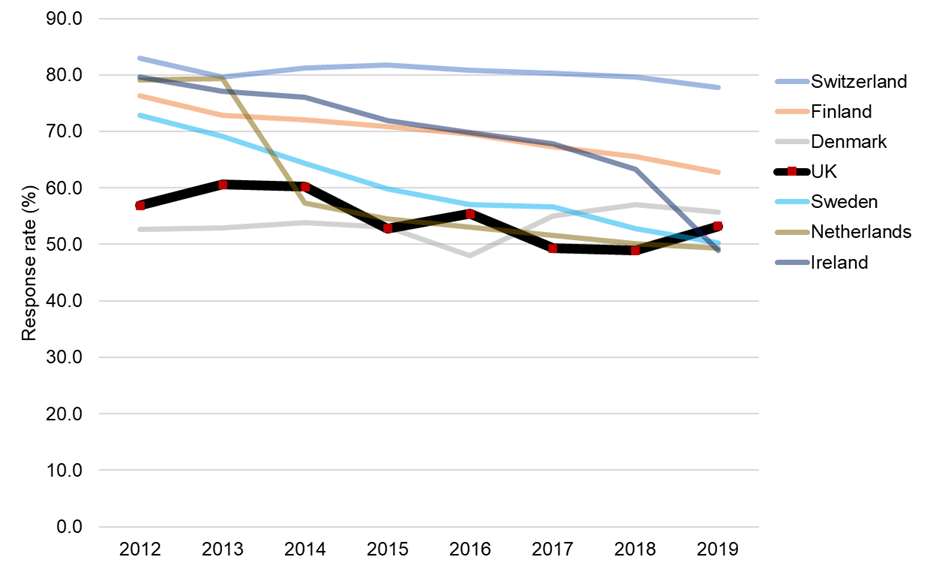

Falling response rates to voluntary household surveys are to some extent an international issue. In respect of the LFS, medium-term data for the period preceding the pandemic are shown in Chart 2. To support comparability, the chart includes countries in Western Europe and those where response to the survey is voluntary. Over the period shown, the UK LFS has had one of the lower levels of response.

Chart 2. EU-LFS response rates.

Source: Eurostat. Data may not be fully comparable across countries and may differ from the nationally reported results. The data should be treated as indicative.

The performance of mandatory business surveys provides a more positive picture:

- ONS has had notable success in moving its business survey collections online; around 2.1 million of the total 2.4 million questionnaires issued are now sent online. Prices data collection has been the latest to move to online capture, with plans to move more surveys online later this year.

- The response rate for monthly turnover in the Monthly Business Survey (MBS) was 83.6% in the most recent available quarter, quarter 4 2024. During the pandemic, the response rate dropped to a low of 79.2% in the equivalent quarter of 2020. Prior to the pandemic, response rates in the equivalent quarter were 85.5% in 2019, 85.1% in 2018 and 88.1% in 2017.

- ONS states that before the pandemic, the achieved sample size for the Annual Survey of Hours and Earnings (ASHE) was approximately 180,000 each year. During the pandemic, the final achieved sample sizes were 144,000 for 2020, 142,000 for 2021, and 148,000 for 2022. In 2023, the achieved sample was 164,000, and in 2024 it further improved to 173,000.

- Response rates for the Annual Business Survey (ABS) averaged 77.2% for the three years prior to the pandemic impacting responses (2016–2018). The response rate dropped to a low of 53.0% for the reporting year of 2019, recovering to 77% for 2023.

Data on the performance of mandatory annual business surveys are included in Annex 4.

The response rates to business surveys reflect substantial recovery towards pre-pandemic levels, and do not in themselves give rise to major concerns about the integrity of the economic statistics which draw upon them. However, stakeholders also raised concerns about the quality of business surveys beyond response rates, echoing our own regulatory work, which has identified challenges with sample reviews, rotation and quality assurance. These challenges indicate that ONS needs to make additional efforts to prevent the associated risks becoming a serious quality issue.
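As a rough illustration of what "substantial recovery" means for the figures quoted above, the short Python sketch below calculates the share of each pandemic-era fall that has since been regained. It is illustrative only and is not part of the evidence gathered for this review. The function names `recovery_share` and `summarise` are hypothetical, and the choice of baseline for each survey (quarter 4 2019 for the MBS, the approximate pre-pandemic sample of 180,000 for ASHE, and the 2016–2018 average for the ABS) is an assumption made for this example.

```python
# Figures are those quoted in the text above. The choice of baseline for each
# survey is an assumption made for illustration only.
SURVEYS = {
    "MBS turnover response rate (%)": {"baseline": 85.5, "low": 79.2, "latest": 83.6},
    "ASHE achieved sample": {"baseline": 180_000, "low": 142_000, "latest": 173_000},
    "ABS response rate (%)": {"baseline": 77.2, "low": 53.0, "latest": 77.0},
}


def recovery_share(baseline, low, latest):
    """Share of the fall from baseline to the low point that has been regained."""
    return (latest - low) / (baseline - low)


def summarise(surveys=SURVEYS):
    """Return the recovery share for each survey, rounded to three decimal places."""
    return {name: round(recovery_share(**values), 3) for name, values in surveys.items()}


if __name__ == "__main__":
    for name, share in summarise().items():
        print(f"{name}: {share:.1%} of the fall recovered")
```

On these assumptions, the MBS has regained roughly 70% of its fall, ASHE roughly 82% and the ABS almost all of it. A different choice of baseline (for example, the 2017 MBS rate of 88.1%) would give a lower figure, so the results should be treated as indicative.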

On business statistics, the independent report on the Statistics Assembly highlighted areas that are problematic in, or missing from, official statistics. These included definition of market; measurement of innovation; metadata on mergers and acquisitions and firm restructuring; linked employer–employee data; the digital economy not being represented in product or industry; and longitudinal data collection on small businesses. The report also called for better methods to collect data, for example using administrative data or more modern direct data collection from business systems. It also suggested setting up a forum to conduct research and recommend a communication strategy that explains the importance of data collection to business owners and encourages their participation.

Given the issues that have emerged in household surveys, stakeholders also questioned the extent to which ONS has effective early warning systems in place for emerging quality risks, and how such risks are best communicated to stakeholders so that action can be taken before they materialise.

Progress on key Bean review recommendations

Stakeholders generally welcomed ONS’s progress on the Bean review recommendations, but most regarded it as slower than they would have expected and preferred.

Stakeholders also identified several mitigating factors:

- Many stakeholders accepted that the pandemic impacted progress and that ONS had appropriately diverted resources towards monitoring this impact. Most stakeholders thought ONS had done this very effectively, drawing on new data sources and real-time data.

- As the Lievesley review also concluded, stakeholders thought that the slow progress in the use of administrative data has been at least partly due to reasons outside ONS’s direct control. There was sometimes a low appetite for risk from other departments and limited resources due to ministerial outputs being prioritised over statistical production and sharing. The lack of harmonisation across complex security requirements has also inhibited cooperation.

Despite these challenges, stakeholders noted that there had also been positive developments, notably in respect of the use of VAT and PAYE data in the National Accounts.

A need for additional focus on administrative data

While noting this progress on the use of administrative data, stakeholders thought that increased efforts were needed. They emphasised the importance of administrative data and other big data sets to support quality in the context of survey challenges. They raised several areas of concern, including:

- how effectively ONS has exploited potential administrative data sources, how well it has used the opportunity to redesign existing surveys so that they complement administrative data, and whether it has lacked a strategic approach to doing this

- whether ONS has been sufficiently proactive in addressing barriers to the use of administrative data, and, in so far as barriers lie outside its control, whether further action to promote data sharing is required by other parts of government

- whether the Integrated Data Service (IDS) has distracted from a focus on acquiring and using administrative data in core economic statistics

Our data sharing and linkage for the public good follow-up report finds that these issues around data sharing are systemic and notes that there continues to be a failure to deliver on data sharing and linkage across government, alongside many persisting barriers to progress. It calls for stronger commitments to prioritise data sharing and linkage. Many of the issues here lie beyond ONS’s direct control.

Concerns about progress with the use of administrative data were also highlighted in the Independent Report on the 2025 UK Statistics Assembly. Based on engagement with stakeholders during the Assembly, the report identified as a priority the need for the Statistics Authority and Government Statistical Service to take a leadership position in a significant scaling up in the use of administrative data across government. The report also identified an immediate need for official statisticians to be more engaged in the design of digital data architectures for administrative data from diverse sources. The report suggested that a cross-Government Statistical Service group could make a start on this, building on and coordinating existing departmental efforts to enhance standardisation and facilitate more sharing.

Measuring a changing economy

In the view of several key stakeholders, ONS had made virtually no progress on the Bean recommendations to improve measurement of the modern economy, particularly where digital technologies are involved. Specific major gaps included the coverage of:

- internet trade/platforms, including for retail trade

- digital services, including uncharged ones

- open-source and own-account software, and cloud computing

The same stakeholders argued that other departments are making more progress than ONS in this area. For instance, the Department for Science, Innovation and Technology has introduced a survey on the adoption of AI, in part to replace data from the relatively new, but discontinued, ONS Digital Economy Survey. ONS has noted that the latter survey was discontinued due to budget pressures, and it has asked some questions as part of other surveys.

The independent report on the Statistics Assembly noted a need to revive the e-commerce survey to include modules on AI use, cloud computing, software development and robotics.

Several stakeholders also noted a lack of granularity in the coverage of the digital economy, which extends to other parts of the service sector (notwithstanding the introduction of the new Annual Survey of Goods and Services).

The role of research

Several stakeholders doubted whether the institutional and organisational changes that followed the Bean review (including, to varying degrees, the creation of the Economic Statistics Centre of Excellence (ESCoE) and the Data Science Campus) and the employment of more economists had effectively and consistently translated into improvements in core ONS outputs.

Noted contributions by ESCoE to improvements in ONS economic statistics included:

- advice on the implementation of double deflation, on the incorporation of VAT data in estimates of GDP and on improved estimates of prices for telecommunications services

- advice on the development of the methodology for incorporating supermarket scanner data in inflation statistics

- the incorporation of new sources of data into rental prices, and the development of surveys of business management practices and their implications for growth

Some wider and longer-term benefits from ESCoE’s activities, for instance in respect of measuring the “green economy” and improving the communication of uncertainty about economic statistics, were also noted. In addition, it was acknowledged that ESCoE has successfully filled the institutional space between producers of official statistics and academia (bringing them together) that is largely unoccupied in many countries, and by doing so has helped develop the next generation of academic experts.

Some stakeholders noted that more recently, they had observed a lack of curiosity, particularly in ONS’s understanding and explanation of the coherence between different sets of economic statistics (for example, the various statistics on household income, and at a macro level, the differing picture of the size of the working population provided by ONS’s population statistics and its labour market statistics).

We heard that research engagement with ONS has sometimes been inconsistent, perhaps reflecting resource issues and frequently changing research priorities, leading to a focus on the short term. In addition, it was noted that challenges have arisen from high staff turnover, loss of expertise, a lack of appropriate career pathways and frequent job rotation within ONS (and the wider Civil Service). Staffing issues may have disrupted longer-term collaboration and eroded institutional memory. These issues will be investigated further as part of our follow-up work to this review.
