Part 1: Planning a model that serves the public good

Models present many opportunities for developing and improving the production of statistics and data, while also assisting decision making and predictive modelling. However, there are risks associated with introducing a new model or making changes to an existing one. Whichever you are doing, it’s important to give full consideration to the impacts: both the immediate impacts (from the model itself) and the impacts further down the chain (e.g. if the outputs are used in other models).

Before developing, using, or changing a model, you should take the necessary steps to appropriately plan and carefully consider whether your planned approach is right for its intended use.

Take time to consider the following:

What is the question you are trying to answer?

Firstly, it’s important to understand the question you are trying to answer and what you hope the product will be. This will allow you to collect the resources you need to answer the question effectively. Starting with the question helps to keep the focus on the aim as you move through model planning and development, and helps make sure you are providing the value that you intended. On the flip side, starting with the resources (e.g. data) and trying to make them ‘fit’ the product that you want is unlikely to provide the value you hoped for. A model might not always be the best way to answer a question. The following questions can help your thinking:

- what are your motivations for building or changing a model?

- can your intended approach directly meet the aim of the work?

- are there alternatives that also need to be considered? (e.g. is a model the only way to answer the question?)

- are you adding value to the topic area?

- what is the deadline of the project? Do you have time to consider all aspects of Trustworthiness, Quality and Value (TQV) before your model needs to be deployed?

After considering the above, your aims should be stated upfront and regularly assessed throughout the model’s development. Where you can foresee tension between the aims and potential uncontrollable factors (e.g. time pressures), be clear that those tensions exist, what they are and what impact they may have.

Checklist

- After considering your question and desired product, the chosen approach is appropriate and warranted.

- Time has been put aside to regularly assess aims and return to your question.

- Any foreseen tensions between aims and uncontrollable factors have been clearly communicated and are expected.

Pillars in Practice: Meeting the diverse needs of users

Let’s say you are thinking of building a new model to predict house prices for the month ahead. Your model replaces an old process. There are 3 types of users for your model:

- An analyst wishing to use the model for an innovative housing project.

- A statistics producer who relies on your model’s outputs to feed into their statistics.

- A homeowner who will be affected by your model’s outputs.

All these users will look at the model through a different lens and will have different requirements, concerns and thoughts. The analyst is likely to be keen for the model to be developed, as there is little risk and a lot of benefit to them; however, the statistics producer is likely to be more cautious and may have more specific requirements that will allow the model to work for their statistics. The homeowner may have concerns about the lack of certainty of a new approach and what that means for them when selling their house. Each user’s thoughts are valid, and failing to engage with even one type of user could leave your model vulnerable to mistrust.

What is the user need?

Users should be at the heart of any decision to develop a model or to change a process they rely on by introducing one. Models can provide opportunities to address user needs in ways that may not have been possible before, such as helping to fill data gaps, and, if used in an existing process, can also improve quality for users.

However, it should never be assumed that a model is the best approach, and engagement with a wide range of users should always be sought before any decision is made. After this engagement, you should be open and transparent about your intended approach and clearly communicate any benefits and potential risks or trade-offs associated with your model. When speaking to users, it is important to be realistic about what to expect from the model, especially its scope and the capability you expect it to have.

Checklist

- A range of users have been engaged with and their requirements have been considered.

What ethical and legal issues do you need to consider?

This section has been written in collaboration with our colleagues in the UK Statistics Authority’s (UKSA) Centre for Applied Data Ethics.

Issues of data quality, methodological limitations, transparency, bias, and fairness all relate to ethical issues that should be carefully considered during project design. There are also legal considerations, particularly around data protection. Although we at OSR don’t create ethical or legal guidance ourselves, we would like to emphasise how important these considerations are and signpost readers to relevant additional material.

The UK Statistics Authority ethical principles identify six key areas that should be explicitly considered to enable the ethical use of data. Models are no exception. These principles include public good, transparency, methods and quality, confidentiality and data security, legal compliance, and public views and engagement. When considering the use of a model, these ethical principles should be considered to ensure that the use of such techniques is ethically appropriate. An ethics self-assessment form can also be completed to assist in this process.

There are also important legal considerations when using data to build models. Your obligations depend on whether you are a data controller or a data processor, and on the context in which you are using the data. For example, under the General Data Protection Regulation (GDPR), data controllers must consider the rights of data subjects, which may include ensuring transparency of models built using their data. Data processors can only process the data as allowed by the data controller, so when exploring model capabilities it is important not to go beyond this. The Information Commissioner’s Office (ICO) has some useful guidance on AI and data protection.

Finally, fairness for all is a major ethical consideration which we’d like to highlight specifically. No model should be built so that its output is in any way unfair or discriminatory to a certain group or individual. This is where human oversight becomes essential, as a model is not capable of identifying fairness, as a concept, for itself.

Checklist

- For models using personal data: the data subject’s identity (whether person or organisation) is protected, information is kept confidential and secure, and the issue of consent is considered appropriately. No data subject should be unfairly disadvantaged by the model.

- The model and data used in the model are consistent with legal requirements such as Data Protection Legislation, the Human Rights Act 1998, the Statistics and Registration Service Act 2007 and the common law duty of confidence.

- When working with data, you are clear about what legal role you have in its use and comply with the requirements under the GDPR.

- Safeguards have been put in place to allow checks for model fairness.

Are the roles and responsibilities clear?

Within statistics, the Chief Statistician, Head of Profession, or those with equivalent responsibility, should have sole authority for deciding on methods used for published statistics in their organisation. This chain of accountability should be extended to models so that it is clear who is responsible for each aspect of a model’s development and upkeep, and who will ultimately be responsible for errors of judgement. This is particularly important for models where accountability of processes and outputs is necessary for data protection and in gaining trust.

An appropriately chosen individual (Chief Statistician, Head of Profession, or other ‘accountable officer’) should be responsible for any statistics, output or data created by the model.

This means the accountable officer is aware of the methodological choices that have been made in designing the model, and those who sit beneath them understand their role in making design decisions clear to the accountable officer. They should also be aware of the methods used for validating the approach and any potential limitations and risks of the model. A chain of model accountability allows anyone involved in the project to know who to go to if something goes wrong or an error is identified. Should any harm be caused by the outcome of the model, the chain of accountability helps the accountable officer identify the source of the issue and who is legally responsible for the consequences of such harm.

When a model is developed by an external partner, the chain of accountability should be established before model development is agreed. This scenario can make accountability less clear as the team developing the model may be different to the team implementing and/or maintaining the model. The developing team have a responsibility to effectively communicate all aspects of the model to the implementing team with particular emphasis on what the model should be used for, its risks and limitations. The implementing team have the responsibility to question and fully understand those methods, risks and limitations for the context in which they are implementing the model. Furthermore, when the relationship between the developing and implementing teams has ended, it is important the implementing team know enough and are skilled enough to maintain and monitor the model.

Contingency should always be built into the chain of accountability, so that if one team member leaves, another member understands enough about the model to take on their role. This is to avoid a situation where the team does not have the relevant skills to continue using the model for its intended purpose. This would have a detrimental impact on the trustworthiness and quality of the outputs and should be considered when planning and designing a model.

For more information on roles and responsibilities in a project lifecycle please see HM Treasury’s Aqua Book.

Checklist

- A clear chain of model accountability has been established with clear roles and responsibilities at every level, including the role of the senior leader.

If the developing and implementing teams are the same:

- There is clarity of roles and sufficient resilience and skill within the team to build and manage the ongoing maintenance of the model.

If the developing and implementing teams are different:

- Handover of knowledge, risks and limitations is given full priority when it is needed, and the skills needed to maintain the model are considered prior to handover.

Pillars in Practice: Building strong links in the chain

A statistics production team (Team A) asks a data science team (Team B) to build them a model to track the cost of leisure activities over the next 5 years. They intend to use the model to feed into their statistical output.

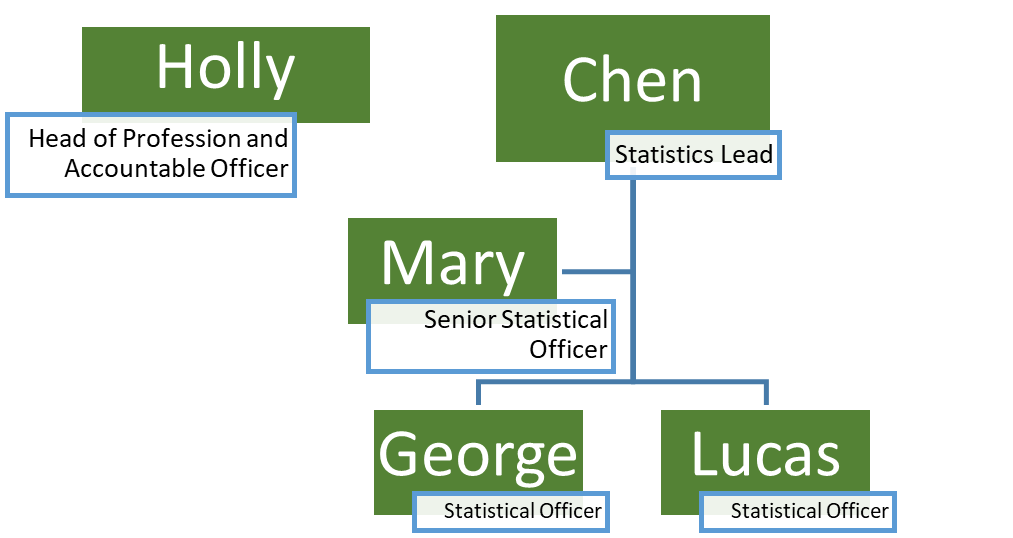

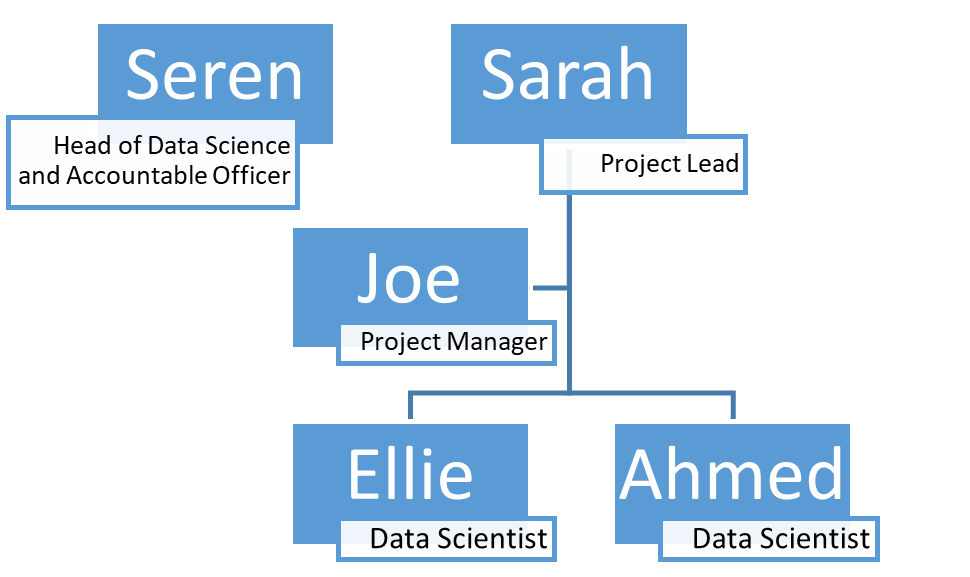

In this case, Team A is the implementing team and Team B is the developing team. You can see how they are structured below.

Team A

Holly – Head of Profession and Accountable Officer

- Chen – Statistics Lead

- Mary – Senior Statistical Officer

- George – Statistical Officer

- Lucas – Statistical Officer

Team B

Seren – Head of Data Science and Accountable Officer

- Sarah – Project Lead

- Joe – Project Manager

- Ellie – Data Scientist

- Ahmed – Data Scientist

Ellie and Ahmed design the model and feed their designs to Sarah for feedback. They then develop the model and feed their progress back up the chain to Joe and Sarah. Mary has been assigned as liaison for Team A, so Joe regularly feeds back progress on the model to Mary. Once the model has been designed, Team B feed back their design decisions to Seren, the accountable officer, who can challenge the model design and assumptions. When Team B is ready to hand over the model to Team A, all members of both teams meet to ensure all information is passed to the right people, and Team B provides training. Holly is also able to challenge and understand decisions made in model design and development. The model is deployed by Team A, but a dialogue remains between Mary and Joe to ensure knowledge stays up to date.

This is one example of how two teams can work together to build trust and transparency when building a model.

Does your team have the right skills?

An important consideration in adopting any model is whether the responsible team is sufficiently skilled. Some data science modelling techniques, in particular machine learning and artificial intelligence (AI), require a different skillset from traditional modelling techniques such as linear regression. Likewise, knowledge of more traditional modelling techniques is needed to avoid the potentially unnecessary use of more complex ones. It is important to consider whether there is currently enough experience and knowledge in your team to ensure the expected standards are met. If not, consider investing time in training your team: this helps to develop skills and promotes innovation and improvement to current practices beyond just the model itself. Investment in the team also helps boost morale and contributes to career paths. If this isn’t possible, then specialist resource may need to be brought in from outside your team.

It is important to note that the team must have the appropriate knowledge and skills to manage both the implementation and the maintenance of the model. In some cases, it may be advantageous to use external resource to build a model, but if there is not enough knowledge and expertise to maintain the model (e.g. updating outdated data), then the model is unlikely to provide quality outputs in the long term.

Individuals may want to consider joining a relevant profession or seeking accreditation to further build trust in their skills and knowledge.

Checklist

The team:

- has the knowledge and skills needed to develop the model (if appropriate).

- has the knowledge and skills needed to manage and maintain the model.

- has the knowledge and skills needed to successfully communicate the model and its outputs.

- has access to continuous development and learning.

- The benefits of building capability beyond the current work have been considered.

Is resource sufficiently prioritised?

Building a model can be resource intensive, especially for tasks such as data cleaning and model testing, but this upfront allocation of resource can also lead to efficiencies in the longer term.

What’s important is that any resource required to develop and maintain a model should be proportionate to the benefits and value arising from its use.

Models very often require not just upfront resource to build but also ongoing resource to maintain, and this ongoing resource is regularly underestimated. If this is the case with your model, it’s necessary to consult your organisation’s overall development plan and consider whether these types of models are likely to continue to be prioritised in the future. Implementing a model which does not have the resource to be maintained correctly could, depending on its use case, lead to more harm than good.

If you need to take resource from elsewhere, it is important to be open and clear about your plans, and any reprioritisation should be made clear to users and stakeholders.

Checklist

- The amount of resource required to implement the model has been estimated.

- The benefits outweigh the investment in resources needed to set up and maintain the model.

- The required resource can be allocated to meet the project aims.

- Any reprioritisation required has been communicated.