The steps to be taken by statistical producers need to go beyond a narrow interpretation of ‘quality assurance’; they should also encompass the working arrangements and relationships with other agents, particularly data supply partners.

These working arrangements can range from straightforward data transfers from a single data supplier, through to more complex large-scale systems.

In addition, some official statistics are based on administrative data provided by another statistical producer body.

Statistics based on administrative data obtained from outside the Government Statistical Service may receive the data in several ways, for example:

- Directly from the organisation that records the data

- Via intermediary organisations

- From another government department or official statistics producer body

Directly from the organisation that records the data

In this case the statistical producer should engage with this organisation directly and take into consideration the detail and nature of information received from the data supplier when deciding on appropriate quality assurance.

The responsibility for producing information about the quality of the statistics lies with the producer. In the case of large numbers of direct suppliers, the statistical producer might explore other ways of engaging, such as holding meetings to discuss common quality issues and liaising with information governance groups.

Via intermediary organisations

For example, local authorities collect data and pass these to the producer. In this case the statistical producer should:

1) have an understanding of the entire data cycle and the quality assurance processes carried out by the original data supplier

2) engage with intermediary organisations to understand their quality assurance processes and standards.

Administrative data collected by another government department or official statistics producer body

In this case both the statistical producer and the supplier department have a responsibility to understand and communicate about the quality of the data.

The producer with responsibility for publishing the statistics has the ultimate responsibility for assuring itself about the quality of the underlying data and communicating this to users.

However, the statistical Head of Profession for the supplier partner body has a responsibility to ensure that it shares its quality assurance information with the statistical producers, including clear information about any limitations or bias in the data, and takes action to address any queries or concerns raised by the statistical producer.

In cases where producer bodies share data for statistical purposes, or both work with the same data supply partners, producers should work together to develop a better understanding of the quality of the administrative data, sharing intelligence and insight about the data with each other and with users.

Data Suppliers’ QA Arrangements Example:

NHS England’s Ambulance Quality Indicators (AQI)

Two sets of indicators are included in AQI statistics: the ambulance systems indicators and ambulance clinical outcomes. The clinical outcomes require a three-month period of follow up with acute hospital trusts to see if health outcomes occur (for example, allowing time to see whether patients recover following a cardiac arrest).

Ambulance Services are required to meet the following target:

- All ambulance trusts to respond to 75 per cent of Category A calls within eight minutes and to respond to 95 per cent of Category A calls within 19 minutes of a request being made for a fully equipped ambulance vehicle (car or ambulance) able to transport the patient in a clinically safe manner
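The arithmetic behind this target can be sketched as follows. This is a minimal illustration only, not NHS England's method: the function names and the sample response times are hypothetical, and treating "within" as less than or equal to the threshold is an assumption.

```python
from typing import Dict, Sequence


def proportion_within(response_minutes: Sequence[float], threshold: float) -> float:
    """Share of calls with a response time at or below the threshold (in minutes)."""
    if not response_minutes:
        raise ValueError("no response times supplied")
    hits = sum(1 for t in response_minutes if t <= threshold)
    return hits / len(response_minutes)


def category_a_summary(response_minutes: Sequence[float]) -> Dict[str, object]:
    """Compare observed proportions with the 75 per cent (8 minute)
    and 95 per cent (19 minute) standards quoted in the text."""
    p8 = proportion_within(response_minutes, 8.0)
    p19 = proportion_within(response_minutes, 19.0)
    return {
        "within_8": p8,
        "within_19": p19,
        "meets_8_minute_standard": p8 >= 0.75,
        "meets_19_minute_standard": p19 >= 0.95,
    }


# Made-up response times in minutes, for illustration only.
sample_times = [5.0, 6.0, 7.0, 7.5, 9.0, 10.0, 12.0, 20.0]
summary = category_a_summary(sample_times)
```

With these made-up times, half of the calls fall within 8 minutes, so the sketch would report the 8 minute standard as missed.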

The indicators of particular interest to the ambulance trusts include further breakdowns of the ‘Category A’ calls into two categories, and four clinical outcomes:

- The proportion of Red 1 (‘most urgent’) calls resulting in an emergency response responded to within 8 minutes

- The proportion of Red 2 (‘serious but less time critical’) calls resulting in an emergency response responded to within 8 minutes

The two sets of AQI statistics enable users, including the Ambulance Services, to benchmark performance across the service and to encourage good performance. The AQIs also allow NHS managers to determine the variance between service providers and this is used as the basis for improving poor performance.

NHS England describes the arrangements for quality assuring the AQI data in its quality statement. These measures include validation of data during loading into the Unify2 data collection system, and quality assurance by the Ambulance Services themselves.

Evidence from Ambulance Services

Ambulance Services can be fined for failing to meet the standards in the Handbook to the NHS Constitution, creating an incentive to ensure reported performance is maintained. NHS England says that part of its confidence in the reliability of the data is due to the fact that, in at least one month in the first half of 2014, every single Ambulance Service reported that it had missed the 75% target for either Red 1 or Red 2. In addition, four Services reported they had missed the Red 2 target for 2013-14 as a whole.

The Association of Ambulance Chief Executives (AACE) told NHS England that they completed an informal internal audit in 2014. AACE found that Ambulance Services had a range of data quality measures in place, with a few examples of particularly good practice; no governance arrangements were found to be weak. AACE said that it had no concerns over general misreporting although it did find that some AQI measures needed tighter definition in order to ensure consistent reporting. It plans to establish a small group of control managers and informatics staff to work on reaching a more consistent understanding in respect of those measures.

The National Ambulance Service Clinical Quality Group (NASCQG), which is responsible for the consistent measurement of the clinical outcomes (CO), organised a benchmarking day in 2014, where all Ambulance Services mapped and compared their CO data collection processes. NASCQG collated the information into a paper for the National Ambulance Services Medical Directors Group and for AACE. NASCQG is also developing a programme of peer-to-peer review, where Services visit each other to harmonise their data systems.

The individual Ambulance Services publish their Board papers, with Integrated Performance reports which show the trends in their performance measures and include the response distribution by time.

Ambulance Services’ Quality Accounts and the audit of Foundation Trusts

As the Department of Health requires for all providers, Ambulance Services compile Quality Accounts, including the information specified in the schedule to The National Health Service (Quality Accounts) Regulations 2010. The Services submit their Quality Accounts to the NHS Choices website by June 30, and make them publicly available.

The Quality Accounts schedule includes a self-assessment against the HSCIC’s Information Governance Toolkit (IGT). In January 2015, the latest HSCIC data showed that all Services assessed themselves as satisfactory against the toolkit. The toolkit chiefly relates to data protection and security, but one of the five sections concerns clinical information assurance. Again, all Services assessed themselves as satisfactory.

Monitor ensures the Foundation Trusts (FTs) are well-governed. It is the responsibility of each FT’s Board to put processes and structures in place to ensure its national data returns are accurate. If it came to light that data returns were not accurate, Monitor would consider whether the Trust is in breach of its licence, although Monitor does not have the mandate to audit FT performance data. Monitor requires the auditors of all FTs to undertake a review of the content contained within the quality report. The auditors have to report on whether there is evidence to suggest that this content has not been reasonably stated in all material respects in accordance with the NHS Foundation Trust Annual Reporting Manual and the six dimensions of data quality set out in the Detailed Guidance for External Assurance on Quality Reports.

In 2013-14, for the five Ambulance Services that are FTs, Monitor required the audit to include data for the 8 minute and 19 minute standards in the Handbook to the NHS Constitution. The Quality Accounts show that PricewaterhouseCoopers reviewed the North East and South West Ambulance Services, KPMG reviewed South Central, and Grant Thornton reviewed West Midlands and South East Coast Ambulance Services. Each auditor agreed there was no such evidence.

For non-Foundation Trusts, the NHS Trust Development Authority (TDA) is responsible for providing assurance that Trusts have effective arrangements in place that enable them to record data accurately. NHS England says that it is in regular contact with the TDA in order to stay up to date with their findings on the six Ambulance Services that are not FTs.

The Care Quality Commission (CQC) is responsible for inspecting all NHS Trusts, and in 2014 it published “A fresh start for the regulation of ambulance services”. CQC use the AQIs on the NHS England website as intelligence when preparing for inspections, and would contact Ambulance Services to query any data that looked obviously wrong. CQC inspections of Ambulance Services, including Emergency Operations Centres, would investigate any concerns they had in CQC’s evidence base, and any concerns that came to light during an inspection. That could, but would not necessarily, include how the Ambulance Service records and stores data, and how data are reported to management and outside the Trust. In order to keep up to date with CQC intelligence, NHS England receives alerts each time a new Ambulance Service inspection is published.

A check on potential gaming – the distribution of Category A measures

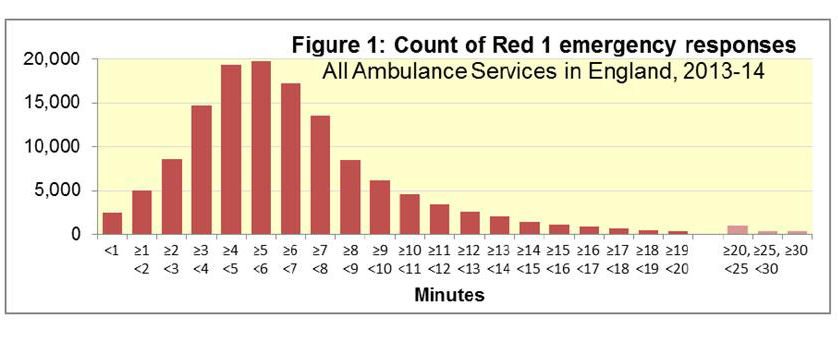

In 2003, the Commission for Health Improvement reported that for some Ambulance Services, response times had an unlikely distribution, increasing to a sharp peak just before 8 minutes, and with very small counts of response times after 8 minutes, suggesting some misreporting of times by Ambulance Services.

Each Ambulance Service supplied NHS England with a data submission showing the distribution of calls for service by time to respond for 2013-14. Figure 1 shows that the frequency follows a normal distribution, with no peak at the target threshold of 8 minutes. NHS England compared these data with the regular data supplied for the AQI, and confirmed they were consistent. NHS England says that it will ask providers to annually refresh the data, to check that this pattern remains true.
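One simple way to look for the kind of peak described above is to count responses in one-minute bins and compare the bin just before the 8 minute threshold with the bin just after it. The sketch below is illustrative only and is not the check NHS England carried out: the function names, the one-minute bin width and the sample data are all assumptions.

```python
import math
from collections import Counter
from typing import Sequence


def minute_counts(response_minutes: Sequence[float]) -> Counter:
    """Count responses in one-minute bins: bin 7 holds times in [7, 8)."""
    return Counter(math.floor(t) for t in response_minutes)


def threshold_cliff_ratio(response_minutes: Sequence[float],
                          threshold_minute: int = 8) -> float:
    """Ratio of the count just before the threshold to the count just after.

    A smooth distribution gives a ratio fairly close to 1 near the threshold;
    a very large ratio suggests a sharp peak before it and few responses after.
    """
    counts = minute_counts(response_minutes)
    before = counts[threshold_minute - 1]
    after = counts[threshold_minute]
    if before == 0 and after == 0:
        raise ValueError("no responses recorded around the threshold")
    if after == 0:
        return math.inf
    return before / after


# Made-up distributions, for illustration only.
smooth = [6.5] * 10 + [7.5] * 10 + [8.5] * 9 + [9.5] * 8
peaked = [6.5] * 5 + [7.5] * 30 + [8.5] * 2

smooth_ratio = threshold_cliff_ratio(smooth)
peaked_ratio = threshold_cliff_ratio(peaked)
```

What ratio should prompt a query to a supplier is a judgement for the producer; any cut-off would need to be set with knowledge of the service's typical response pattern.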